UK rules out new AI regulator

The UK government will not institute a new regulator to oversee the development of AI, fearing additional red tape could make the country less competitive internationally.

The UK government will not heed warnings from AI experts, including Tesla CEO Elon Musk, to adopt a rigorous regulatory framework to deal with emerging artificial intelligence technology. Instead, it will rely on “responsible use” guidelines to reduce red tape.

Artificial intelligence has grown tremendously in power over the last ten years. Recently, new large language models (LLMs) have been reported to pass the Turing test, often treated as a threshold for human-like intelligence. Now, industry leaders and academics worry that unregulated technology could damage or end human civilisation, posing an “existential risk.”

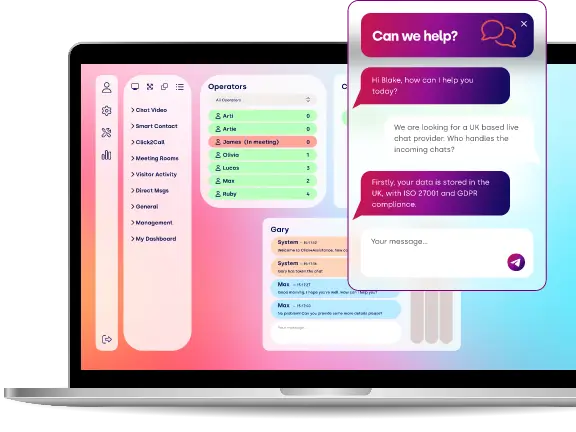

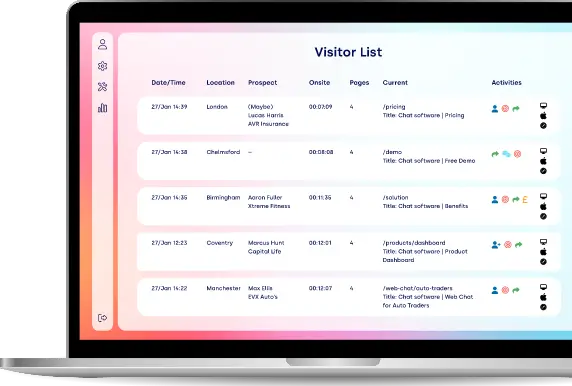

Deep learning-powered chatbots may already be changing the workplace. Systems can understand human queries and instructions written in natural language and produce various scripts and reports. Firms are rushing to learn how to put live chat on websites to take advantage of the technology and reduce costs.

Leading technologies include GPT-4 from OpenAI, a language model that understands context, nuance, and connections between concepts. Anyone can use these systems to create content and perform cognitive tasks, potentially undermining vast swathes of the white-collar economy.

Near-term, the risks are significant. Commentators fear AI technology could disrupt employment, entrench biases, and spread misinformation. Longer-term, experts worry computers could end humanity through deliberate extermination or through misspecified objectives.

A white paper from the Department for Science, Innovation and Technology proposes rules for general-purpose AI systems, like ChatGPT, designed to perform a broad swathe of tasks.

Instead of giving responsibility to a single regulator, the UK government will hand the task to existing regulators, including the Competition and Markets Authority and the Equality and Human Rights Commission. These agencies can then adopt a suitable approach for dealing with AI in their respective fields. Regulators can only operate under existing laws and won’t get new powers.

The government is adopting this conservative approach because it fears what might happen to the industry if it toughens its stance. Companies may feel held back if they need to comply with onerous rules when implementing AI systems.

Moreover, artificial intelligence proponents point out that the technology is already delivering numerous benefits, socially and economically. Implemented correctly, it has the potential to help UK productivity escape its decades-long malaise, improving living standards for everyone, they say.

Critics aren’t so sure. According to Michael Birtwistle, associate director at the Ada Lovelace Institute, gaps in the new approach may pose risks. “Initially, the proposals in the white paper will lack any statutory footing,” he says. Developers won’t need to meet additional regulatory requirements, allowing development to continue unabated.

Simon Elliot, a partner at law firm Dentons, is even more damning, calling it a “light touch” approach that runs against the global trend in AI regulation. The UK could potentially become a pariah state, particularly now that London is the second-largest artificial intelligence hub in the world, after Silicon Valley.

Over the coming years, the government will issue more practical guidance to organisations on abiding by the principles set out in the white paper. However, it has not committed itself to any formal regulation.