OpenAI announces GPT-4, successor to the model behind ChatGPT

GPT-4, the successor to the GPT-3.5 model behind ChatGPT, is here, offering a range of new abilities, including image understanding, a longer short-term memory, and a better grasp of context.

If you thought GPT-3.5’s capabilities were scary, just wait until you see what GPT-4 can do. Building on the success of its predecessor, the new system is a large multimodal model: it accepts both image and text inputs and produces text outputs. It’s a game-changer for anyone using a live chat tool for website communication.

What is GPT-4 and what can it do?

GPT-4 stands for Generative Pre-trained Transformer 4. Like its predecessors, it is a deep neural network built on the Transformer architecture, which uses self-attention to learn patterns from large amounts of data. However, it differs from previous versions in one important way: instead of relying solely on text, GPT-4 also accepts images as input, letting it perform tasks such as describing images (captioning) and answering questions about them. It still responds only in text, so it cannot generate images itself.
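OpenAI has not published GPT-4's internals, so the following is only a textbook illustration of the self-attention idea mentioned above, not OpenAI's code. It is single-head scaled dot-product self-attention in plain Python. The causal mask, where each token may only look at itself and earlier tokens, is the standard setup for models trained to predict the next token. The toy embeddings and identity weight matrices are made-up values chosen purely for demonstration.

```python
import math


def softmax(xs):
    # Subtract the row maximum before exponentiating for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # Plain list-of-lists product: (n x k) times (k x m) gives (n x m)
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product self-attention over a sequence x (n x d_model)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # every position scored against every other
    weights = []
    for i, row in enumerate(scores):
        # Scale by sqrt(d_k); with causal=True, hide later positions (j > i)
        scaled = [s / math.sqrt(d_k) if (not causal or j <= i) else -math.inf
                  for j, s in enumerate(row)]
        weights.append(softmax(scaled))
    # Each output row is a weighted average of the value vectors
    return matmul(weights, v), weights


# Toy example: three 2-dimensional "token embeddings", identity projections
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
print(attn[0])  # the first token can only attend to itself: [1.0, 0.0, 0.0]
```

Every row of attention weights sums to 1, so each output is a blend of the tokens the model is allowed to "look at". Real models stack many such heads and layers with learned weights.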

It is also widely assumed to be bigger than GPT-3, which has 175 billion parameters, although OpenAI has not disclosed GPT-4’s size. More importantly, it can take in far more text at once: the largest version has a context window of about 32,000 tokens (more than 25,000 words), compared with roughly 4,000 tokens for GPT-3.5. That lets it keep track of much more of a conversation or document and build on earlier exchanges within it. GPT-4 can also follow complex instructions in natural language, solve brain-twisting puzzles, write essays, generate code, compose songs, and more.
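Through OpenAI's chat API, each request is stateless: an app resends the conversation every turn, and the model only "remembers" what fits in its context window. The sketch below shows one simple way an app might drop the oldest turns once a budget is exceeded. `trim_history` and `count_words` are hypothetical helpers, not part of any OpenAI library, and counting words instead of real tokens is an assumption made only to keep the example self-contained; a real app would count tokens with the model's own tokenizer. The role/content dictionaries mirror the message format used by OpenAI's chat API.

```python
def count_words(text):
    # Hypothetical stand-in for a real tokenizer: one "token" per word
    return len(text.split())


def trim_history(messages, max_tokens, count=count_words):
    """Keep the system prompt plus the most recent messages that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count(m["content"]) for m in system)
    kept = []
    for m in reversed(turns):  # walk from newest to oldest
        cost = count(m["content"])
        if cost > budget:
            break  # this turn and everything older falls out of "memory"
        kept.append(m)
        budget -= cost
    return system + kept[::-1]  # restore chronological order


history = [
    {"role": "system", "content": "You are a helpful assistant"},
    {"role": "user", "content": "My name is Sam"},
    {"role": "assistant", "content": "Nice to meet you Sam"},
    {"role": "user", "content": "What is my name"},
]
visible = trim_history(history, max_tokens=14)
print([m["content"] for m in visible])
# ['You are a helpful assistant', 'Nice to meet you Sam', 'What is my name']
```

With a 14-word budget, "My name is Sam" no longer fits, so the model would have to infer the name from the assistant's reply. A larger context window simply pushes that cut-off further back.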

How does GPT-4 improve ChatGPT?

Despite its apparent supernatural powers, ChatGPT, based on OpenAI's GPT-3.5 large language model, had limitations. For instance:

- It could only handle text inputs and outputs.

- It sometimes produced unsafe or inappropriate responses.

- It had difficulty maintaining coherence over long conversations.

- It lacked creativity and originality.

Now, though, OpenAI is promising to change all this. GPT-4, it says, addresses these limitations by adding visual capabilities, improving safety mechanisms, increasing context length, and enhancing creativity.

For instance, OpenAI says that on its internal evaluations GPT-4 is 82% less likely than GPT-3.5 to respond to requests for disallowed content, whether that is genuinely harmful material or anything else the company chooses to block. It also has a longer short-term memory, remembering details from earlier in the same conversation, much as a person would. Lastly, it can generate novel responses to questions, developing a kind of ersatz originality thanks to the sheer volume of data it was trained on.

How can I try GPT-4?

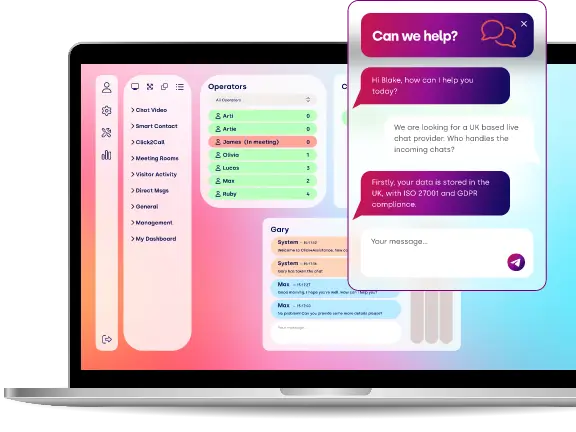

OpenAI has made GPT-4 available in ChatGPT Plus, its paid ChatGPT subscription ($20 a month), with a cap on how many messages subscribers can send. Users can steer the conversation with their own text prompts, whether they want advice, a game, or a bit of role-play. In that sense, it’s a bit like Click4Assistance’s live chat for website owners, who can create their own conversational structure using natural language processing and curation.

OpenAI is also opening its GPT-4 API to developers, initially through a waitlist, letting them build the model into their own products. The API provides a simple interface for querying the model with parameters such as temperature (how random, and therefore how varied, the output is), frequency penalty (which discourages the model from repeating the same words) and presence penalty (which nudges it toward new topics). The hope is that the new system will let businesses better tailor the AI experience to their clients, customers, or audience.
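To make those parameters concrete, here is a minimal Python sketch that builds a request to OpenAI's Chat Completions endpoint using only the standard library. The endpoint URL, the `gpt-4` model name and the parameter ranges reflect OpenAI's API reference at the time of writing, but check the current documentation before relying on them. `build_gpt4_request` and `send` are hypothetical helper names, and the API key shown is a placeholder.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_gpt4_request(prompt, temperature=1.0, frequency_penalty=0.0,
                       presence_penalty=0.0, api_key=None):
    """Build (but do not send) a Chat Completions request for GPT-4."""
    # Assumed ranges per OpenAI's API reference: temperature 0-2, penalties -2.0 to 2.0
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    for name, value in (("frequency_penalty", frequency_penalty),
                        ("presence_penalty", presence_penalty)):
        if not -2.0 <= value <= 2.0:
            raise ValueError(f"{name} must be between -2.0 and 2.0")
    body = {
        "model": "gpt-4",
        "messages": [{"role": "user", "content": prompt}],
        # Higher temperature = more random, more varied wording
        "temperature": temperature,
        # Penalty grows with how often a token has already been used
        "frequency_penalty": frequency_penalty,
        # One-off penalty for any token that has appeared at all
        "presence_penalty": presence_penalty,
    }
    key = api_key if api_key is not None else os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {key}"},
        method="POST",
    )


def send(request):
    # Performs the actual network call; needs a valid key and network access
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)["choices"][0]["message"]["content"]


req = build_gpt4_request("Write a friendly greeting for our website chat",
                         temperature=0.8, frequency_penalty=0.5,
                         presence_penalty=0.3, api_key="sk-placeholder")
print(json.loads(req.data)["model"])  # gpt-4
```

Building the request separately from sending it makes it easy to inspect or log exactly what the model will receive; in a real project, OpenAI's official client library would usually handle these details.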

What are the implications of GPT-4?

The implications of GPT-4 could be far-reaching. According to the BBC, there are concerns it could take over thousands of jobs now done by humans. There are also worries about harmful or misleading content, though OpenAI said it spent six months working to identify and iron out these issues. As with any new technology, it has the potential to wipe out some industries and create others.