Consumer protection bodies urged to investigate ChatGPT

The European Consumer Organisation is calling on policymakers to investigate ChatGPT and similar technologies because of the harm they may cause consumers.

ChatGPT may soon face a regulatory backlash in Europe. The European Consumer Organisation (BEUC) is calling on EU consumer protection agencies to investigate the technology and its potential for harm. The move comes after several countries, including Italy, placed restrictions or outright bans on chatbot usage.

Since the start of 2023, government officials and regulatory bodies have been playing catch-up with advanced chatbots. Modern systems can now generate plausible-sounding text that some groups fear could harm consumers and wider society. Now there is a clear drive to restrict the use of these applications and place rules around what they can say.

The main player, ChatGPT, launched in November 2022 and has since been upgraded with GPT-4 technology. Since then, Microsoft has introduced a ChatGPT-powered search facility in Bing, and Google has launched its in-house tool, Bard. Other platforms, such as Facebook and Amazon, are following suit.

BEUC fears the plethora of high-performance, AI-powered chatbots will fundamentally change consumers’ relationship with the truth. In a world where advanced language models can generate plausible-sounding statements in seconds, it may become harder for people to distinguish fact from fiction. The organisation, which spans 32 countries and 46 consumer groups, set out its fears in a letter to the EU’s network of consumer protection and safety authorities.

The limitations of large language models (LLMs) are already well understood by the engineers and companies that work on them. These systems can “hallucinate” concepts, facts, and ideas, confidently espousing them, even if they are false.

Google’s introduction of Bard in February 2023 was a good example of this. In an advertisement, the company asked Bard, “What new discovery from the James Webb Space Telescope (JWST) can I tell my 9-year-old about?” Bard incorrectly claimed that the JWST took the first-ever pictures of an exoplanet – a planet outside the solar system. In fact, the European Southern Observatory’s Very Large Telescope (VLT) took the first image of an exoplanet in 2004, more than 18 years earlier.

There are countless other examples of LLM-based chatbots making similar mistakes. While these systems might produce beautiful prose, they can’t evaluate the facts that go with it. In many cases, their statements are outright false, and it isn’t clear how they arrived at such conclusions.

BEUC fears that ChatGPT and similar technologies will distort consumers’ perceptions of reality by presenting false information that appears true. Such glitches are different from bias – another significant concern – in that they are innate. Bias generally only emerges after truthful interactions with humans and data, whereas hallucinations appear to be fundamental to generative AI techniques.

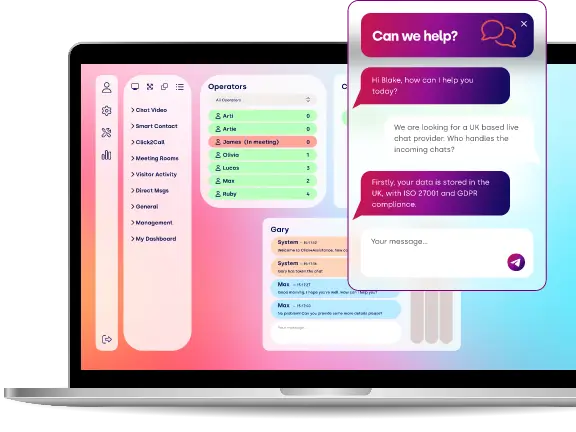

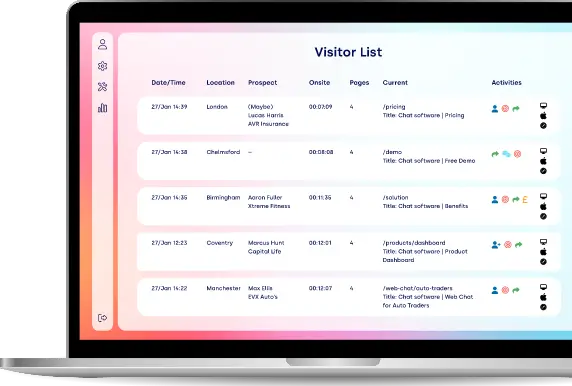

BEUC’s letter does not seek to ban chatbots outright. However, it does call for more regulatory scrutiny of this as-yet unchallenged industry. Chatbots have the power to do great good as live chat software for website owners, but in their current form, perhaps not for society as a whole.